Leadership Development Programs For Teenagers

Evidence-based youth leadership programs using adolescent brain science, SEL & PYD to boost executive function, planning and academic outcomes.

Adolescent-Brain-Aligned Leadership Programs

We favor leadership programs that align with adolescent brain science. Such programs tap teens' high neuroplasticity and work with the slow maturation of the prefrontal cortex, which can drive faster, longer-lasting gains in planning, impulse control and complex decision-making. Evidence supports programs that pair social-emotional learning (SEL) and Positive Youth Development (PYD) with scaffolded skills, short repeated practice, authentic responsibilities, trained mentors and measurable rubrics. These approaches improve academics and behavior, but only with enough contact hours to sustain the gains.

Key Takeaways

Design around adolescent neurodevelopment

Adolescence is a window of opportunity because of elevated neuroplasticity and a late-maturing prefrontal cortex. Design programs to train executive function and self-regulation through progressive, scaffolded practice rather than one-off lessons.

Proven impact

Interventions aligned with SEL and PYD show measurable benefits. A widely cited meta-analysis (Durlak et al., 2011) reports roughly an 11-percentile-point academic gain. Realistic targets for program planners are about 10–20% improvement, or effect sizes near 0.2–0.3, over 6–12 months.
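The two kinds of targets are related. Assuming scores are roughly normally distributed with similar spread in both groups, an effect size d moves the average participant from the 50th percentile to the Φ(d) percentile of the comparison group (Cohen's U3). A minimal Python sketch of that conversion; the function name is illustrative:

```python
from math import erf, sqrt


def percentile_gain(d: float) -> float:
    """Convert a standardized effect size (Cohen's d) to a percentile-point gain.

    Assumes normally distributed outcomes with equal spread in both groups,
    so the average treated participant lands at the Phi(d) percentile of the
    comparison distribution (Cohen's U3).
    """
    phi = 0.5 * (1.0 + erf(d / sqrt(2.0)))  # standard normal CDF evaluated at d
    return 100.0 * (phi - 0.5)


for d in (0.2, 0.25, 0.3):
    print(f"d = {d:.2f} -> about {percentile_gain(d):.1f} percentile points")
```

Under this assumption the 0.2–0.3 band corresponds to roughly 8–12 percentile points, which brackets the 11-point SEL benchmark.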

Core program features

- Clear, scaffolded progressions that build skills sequentially.

- Short, repeated practice cycles (micro-practice and short drills) to exploit consolidation.

- Authentic leadership responsibilities (real tasks with consequences) to support transfer.

- Trained adult mentors who provide coaching, feedback and safe challenge.

- Reflection and capstone projects to consolidate learning and demonstrate applied skills.

- Measurable rubrics that make progress visible and actionable for youth and staff.

Measurement essentials

Combine multiple methods to get a reliable picture of change:

- Validated surveys — for example, Rosenberg Self-Esteem, General Self-Efficacy, and youth leadership scales.

- Observable rubrics scored 0–4 for core competencies and behaviors.

- 360° feedback from peers, mentors and teachers/families.

- Behavior counts (e.g., office referrals, attendance, participation rates).

- Pre/post follow-ups at 3, 6 and 12 months to assess durability of gains.
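Scheduling follow-ups when the cohort starts makes them harder to skip. A minimal sketch, assuming the 3-, 6- and 12-month offsets are counted from the baseline date; `add_months` and `follow_up_schedule` are hypothetical helpers, and month-end dates are clamped (30 November plus 3 months becomes the last day of February).

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def follow_up_schedule(baseline: date, offsets: tuple[int, ...] = (3, 6, 12)) -> dict[int, date]:
    """Map each follow-up offset (months after baseline) to its due date."""
    return {months: add_months(baseline, months) for months in offsets}


# Hypothetical baseline date for a fall cohort.
for months, due in follow_up_schedule(date(2024, 9, 16)).items():
    print(f"{months}-month follow-up due {due.isoformat()}")
```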

Delivery, access and sustainability

Set a minimum viable dosage of about 20 contact hours over 6–8 weeks. For durable change, aim for approximately 60–120 hours across a year. Promote equity and long-term viability through:

- Sliding fees, transport and material supports to remove access barriers.

- Appropriate staffing ratios and investment in mentor training.

- Diversified funding and partnerships to sustain operations.

- Alumni pathways and continued roles that preserve gains and build leadership pipelines.

Why Leadership Development for Teenagers Matters

We design leadership programs with brain science as the starting point. Adolescence is a unique learning window because the prefrontal cortex matures into the mid-20s. That means planning, impulse control and complex decision-making are still developing. With higher neuroplasticity than adults, teens learn new self-regulation and decision skills faster and more durably.

Ineffective training wastes that window, while well-structured social-emotional work pays off. “When implemented well, SEL improves students’ social-emotional skills, attitudes, behavior and academic performance” (CASEL summary of SEL impact). Those gains show up in measurable metrics: Durlak et al. (2011) reported an 11-percentile-point gain in academic performance among students in SEL programs. We use that evidence to prioritize proven practices, not fads.

Longitudinal youth research strengthens the case. The 4-H Study of Positive Youth Development (Lerner and colleagues, Tufts University) links PYD-style programming to higher civic participation, increased academic engagement and better mental health indicators. Teen leadership programs align closely with PYD principles: strength-based development, progressive responsibility and supportive adult relationships.

Because teens’ brains are more plastic, training that starts in adolescence typically yields faster skill acquisition and longer-lasting behavior change than identical interventions begun in adulthood. That comparative advantage affects curriculum design, practice schedules and assessment choices. We set up frequent, short practice cycles and real-world leadership tasks so learning consolidates into habits.

Practical implications for program design

- Clear, scaffolded skills progressions that match developmental stages and demand incremental executive functioning practice.

- Short, repeated practice opportunities that leverage adolescent plasticity for faster learning.

- Real responsibilities and decision-making roles that transfer skills from simulation to life.

- Trained adult mentors who give specific feedback and model controlled decision-making.

- Measurable SEL outcomes and academic tracking to document gains like the 11-percentile-point improvement reported by Durlak et al. (2011).

- Alignment with PYD principles from the 4-H Study of Positive Youth Development (Lerner) to support long-term civic and mental-health benefits.

- Integrated reflection sessions so teens connect emotion, behavior and goal-setting—this strengthens self-regulation and leadership identity.

We encourage families and schools to look for programs that combine these elements. Explore our youth leadership program to see how we structure practice, feedback and responsibility to make leadership learning stick.

Core Competencies, Evidence-Based Activities & How to Measure Them

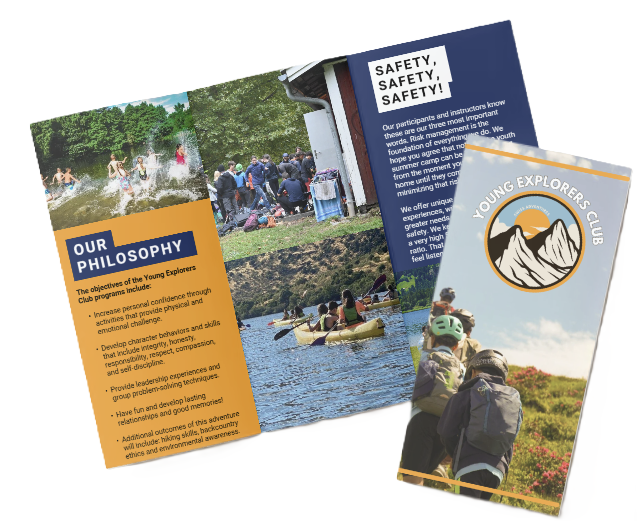

At the Young Explorers Club, we focus on ten teachable leadership competencies: communication, teamwork, problem-solving, decision-making, self-awareness, emotional regulation, conflict resolution, community engagement, ethical leadership, and project management. Each competency gets a clear behavioral definition and a 0–4 observable rubric with behavioral anchors, so staff and participants rate the same actions.
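One way to keep anchors consistent across raters is to store each rubric as data and reject incomplete ones. A minimal Python sketch; the `CompetencyRubric` class is hypothetical, and apart from the two communication behaviors named later in this guide (a 3-minute persuasive talk, active listening in debriefs), the anchor wording is illustrative.

```python
from dataclasses import dataclass

LEVELS = range(5)  # rubric scores 0-4


@dataclass
class CompetencyRubric:
    """A 0-4 observable rubric with one behavioral anchor per score level."""

    competency: str
    anchors: dict[int, str]

    def __post_init__(self) -> None:
        # Every level needs an anchor so staff and participants score the same behavior.
        missing = [level for level in LEVELS if level not in self.anchors]
        if missing:
            raise ValueError(f"{self.competency}: missing anchors for levels {missing}")

    def describe(self, score: int) -> str:
        """Return the behavior a given score stands for."""
        if score not in LEVELS:
            raise ValueError(f"score must be 0-4, got {score}")
        return self.anchors[score]


# Illustrative anchors; adapt the wording to your own behavioral definitions.
communication = CompetencyRubric(
    "communication",
    {
        0: "Does not contribute to group discussion",
        1: "Contributes only when prompted",
        2: "Shares ideas clearly in small groups",
        3: "Uses active listening in group debriefs",
        4: "Delivers a 3-minute persuasive talk",
    },
)
print(communication.describe(3))
```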

Evidence-based activities mapped to competencies

Below are practical activity-to-competency pairings I use to build skills and collect evidence:

- Role-play scenarios — conflict resolution; ethical dilemmas.

- Service-learning projects — community engagement; project management.

- Peer teaching & mentoring — communication; teamwork.

- Problem-based learning challenges — problem-solving; decision-making.

- Reflective journaling — self-awareness.

- Youth-led planning committees — ethical leadership; facilitation.

- Public-speaking micro-challenges (2-minute impromptu) & project-pitch contests — communication; persuasion.

- Emotional-regulation practices (guided mindfulness + emotion-vocabulary practice) — emotional regulation.

We pair each activity with a short, observable checklist so coaches can code behaviors in real time.

Measurement instruments and metrics

The measurement approach combines validated scales, behavioral data, and qualitative reflections. Key instruments and metrics include:

- Rosenberg Self-Esteem Scale — for self-concept.

- General Self-Efficacy Scale — for perceived efficacy.

- Youth Leadership Life Skills Development Scale (or similar) — for leadership-specific growth.

- SEL measures referenced in Durlak et al. — for social-emotional learning benchmarks.

- Pre/post competency rubrics (0–4) with behavioral anchors.

- 360° peer/mentor feedback to triangulate observations.

- Behavior counts (attendance; leadership roles assumed).

- Coded reflection journals and qualitative case studies.

Suggested KPI targets & evaluation design

Suggested targets should be realistic and defensible. Typical benchmarks I use:

- 10–20% improvement in validated leadership measures over 6–12 months.

- Link that goal to the 11-percentile-point academic benchmark for SEL (Durlak et al.) where applicable.

- Set an effect-size target of roughly 0.2–0.3.

- Adopt a mixed-methods design: quantitative pre/post surveys with a comparison group when feasible, plus qualitative case studies and focus groups for depth.
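For the comparison-group design, the standardized mean difference between program and comparison scores is the usual effect-size metric. A minimal sketch using Python's standard library; it assumes two independent groups, uses the pooled standard deviation, and relies on a common large-sample approximation for the standard error of d, so intervals from small cohorts are rough. The score lists are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d_with_ci(program: list[float], comparison: list[float],
                     z: float = 1.96) -> tuple[float, tuple[float, float]]:
    """Cohen's d (program minus comparison) with an approximate 95% CI."""
    n1, n2 = len(program), len(comparison)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    s1, s2 = stdev(program), stdev(comparison)
    # Pooled standard deviation across both groups.
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    d = (mean(program) - mean(comparison)) / pooled_sd
    # Large-sample approximation to the standard error of d.
    se = sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    return d, (d - z * se, d + z * se)


# Hypothetical post-test scores on a leadership scale.
program_scores = [3.1, 3.4, 2.9, 3.8, 3.5, 3.0, 3.6, 3.3]
comparison_scores = [2.9, 3.0, 2.7, 3.4, 3.1, 2.8, 3.2, 3.0]
d, (low, high) = cohens_d_with_ci(program_scores, comparison_scores)
print(f"d = {d:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

With eight youth per group the interval is wide, which is why small cohorts benefit from qualitative evidence alongside the numbers. For small samples, Hedges' g applies a correction factor that shrinks d slightly.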

Practical measurement advice

Operational tips I apply in programs to keep measurement feasible and useful:

- Build observable rubrics (0–4 per competency) with clear behavioral anchors — for example: communication = delivers a 3-minute persuasive talk; uses active listening in group debriefs.

- Combine validated surveys (Rosenberg, General Self-Efficacy, Youth Leadership Life Skills Development Scale) with behavior counts and reflection journals for multiple data streams.

- Collect baseline data, then follow up at 3, 6, and 12 months.

- Use 360° feedback at midpoint to triangulate progress between self, peer, and mentor ratings.

- Strive for 70%+ retention in multi-week programs to preserve internal validity.

- For small cohorts, supplement quantitative targets with rich qualitative evidence from focus groups and coded reflections.

- Record simple behavior counts (attendance, leadership roles assumed) as low-burden, high-value indicators.

- Report effect sizes and confidence intervals alongside percentage-change KPIs to maintain defensible claims.
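A minimal sketch of the percentage-change KPI and the 70% retention check, assuming a positive baseline mean on a validated scale; `kpi_report` and its parameter names are hypothetical. Because percent change depends on a scale's range and starting point, reporting an effect size next to it, as the last tip recommends, keeps the claim defensible.

```python
def kpi_report(pre_mean: float, post_mean: float, enrolled: int, completed: int,
               min_improvement_pct: float = 10.0, retention_floor: float = 0.70) -> dict:
    """Percent change on a validated measure plus a retention check.

    The 10-20% improvement band is a planning target, so only its lower
    bound is treated as a floor here.
    """
    if pre_mean <= 0 or enrolled <= 0:
        raise ValueError("pre_mean and enrolled must be positive")
    pct_change = 100.0 * (post_mean - pre_mean) / pre_mean
    retention = completed / enrolled
    return {
        "pct_change": round(pct_change, 1),
        "meets_improvement_target": pct_change >= min_improvement_pct,
        "retention": round(retention, 2),
        "meets_retention_target": retention >= retention_floor,
    }


# Hypothetical cohort: mean leadership score rose from 2.5 to 2.9; 31 of 40 finished.
print(kpi_report(pre_mean=2.5, post_mean=2.9, enrolled=40, completed=31))
```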

Explore program-level implementation examples in our youth leadership program to see these measures in action.

Program Models, Dosage & Sample Curriculum

At the Young Explorers Club, we group leadership pathways by setting and intensity. School-based models (Student Government, Peer Leadership) scale broadly at low cost per student, while out-of-school intensives such as NSLC, Outward Bound or NOLS drive deep engagement but reach fewer youth at a higher cost per participant.

Programs vary by type and purpose. Club and non-profit options such as 4-H, Girl Scouts, Boys & Girls Clubs and YMCA Youth & Government combine continuity with community service. Competitive and career-focused tracks (DECA, FBLA, Model UN, Key Club) emphasize applied skills and résumé-ready experiences. Civic or military-style programs like JROTC and Civil Air Patrol build discipline and civic knowledge. I recommend matching program choice to the desired outcome: broad exposure or concentrated skill acceleration.

Typical timeframes and ballpark costs:

- Workshop: 1–3 hrs; free–$50 per student.

- Semester elective: 30–80 contact hours; nominal fees.

- Year-long club: 60–150 hrs; low–moderate cost.

- Residential/outdoor intensives: 7–21 days; $500–$3,000+.

Research-aligned dosage matters. Single workshops rarely change behavior. A minimum viable program equals about 20 contact hours spread over 6–8 weeks. For lasting change we recommend 60–120 contact hours across a school year. Intensive residential formats can accelerate learning with 40+ contact hours in 7–14 days, but they must include structured follow-up and coaching to sustain gains.
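The dosage thresholds above can be turned into a quick screening check for a proposed schedule. A minimal sketch; the function name and labels are hypothetical, a residential intensive is approximated as 40+ contact hours within two weeks, and the 60–120 hour range is assumed to be spread across the school year as recommended.

```python
def classify_dosage(contact_hours: float, weeks: float,
                    has_follow_up_coaching: bool = False) -> str:
    """Screen a schedule against the dosage guidance in this section."""
    if weeks <= 2 and contact_hours >= 40:
        # Residential intensive: 40+ contact hours in 7-14 days.
        if has_follow_up_coaching:
            return "intensive with follow-up"
        return "intensive: add structured follow-up and coaching"
    if contact_hours >= 60:
        # Durable-change range (60-120 hours), assumed spread over a school year.
        return "durable-change range"
    if contact_hours >= 20 and weeks >= 6:
        # Minimum viable: about 20 contact hours over 6-8 weeks.
        return "minimum viable"
    return "below minimum viable dosage"


for hours, weeks in [(3, 1), (24, 8), (90, 36), (45, 2)]:
    print(hours, weeks, "->", classify_dosage(hours, weeks))
```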

Core curriculum modules I use as the backbone are:

- Self-awareness & values

- Communication & public speaking

- Team dynamics & facilitation

- Conflict management

- Project planning & execution

- Civic engagement & ethics

- Career leadership & networking

Session length typically runs 60–120 minutes. Programs aimed at durable skill transfer work best over 6–12 months, though 8–12 weeks can seed growth.

Experiential design is mandatory for impact. I require a capstone team project with a public presentation, 10–40 community service hours per cohort per year, and a mentorship match goal of 1:5 mentor:mentee. Track these key metrics to evaluate progress:

- Total contact hours

- Retention rate

- Competency-rubric improvements

- Community service hours completed
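A minimal sketch of how these metrics, plus the 1:5 mentor-match goal, might be computed from a cohort's records; the function and field names are hypothetical, and rubric gains assume paired 0–4 pre/post scores for each participant who completed.

```python
from math import ceil
from statistics import mean


def cohort_metrics(session_minutes: list[int], enrolled: int, completed: int,
                   service_hours: float, rubric_pre: list[float],
                   rubric_post: list[float]) -> dict:
    """Summarize contact hours, retention, rubric gains and service hours."""
    if len(rubric_pre) != len(rubric_post):
        raise ValueError("pre and post rubric scores must be paired")
    gains = [post - pre for pre, post in zip(rubric_pre, rubric_post)]
    return {
        "contact_hours": round(sum(session_minutes) / 60, 1),
        "retention_rate": round(completed / enrolled, 2),
        "mean_rubric_gain": round(mean(gains), 2),
        # Cohort target of 10-40 community service hours per year.
        "service_hours_on_target": 10 <= service_hours <= 40,
        # Mentors needed to meet the 1:5 mentor:mentee goal.
        "mentors_needed": ceil(enrolled / 5),
    }


# Hypothetical cohort: ten 90-minute sessions, 12 enrolled, 10 completed.
print(cohort_metrics([90] * 10, enrolled=12, completed=10, service_hours=25,
                     rubric_pre=[1, 2, 2, 1, 2, 3, 1, 2, 2, 1],
                     rubric_post=[2, 3, 3, 2, 3, 3, 2, 3, 4, 2]))
```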

For program examples and implementation tips, I point staff toward our youth leadership program guidance.

Sample 10‑Week Outline (practical weekly plan)

- Week 1: Values, self-awareness assessments, and goal setting; set cohort norms.

- Week 2: Communication basics—listening drills and feedback practice.

- Week 3: Public speaking practice with peer coaching and brief video review.

- Week 4: Team roles, facilitation techniques, and small-group simulations.

- Week 5: Conflict management role-plays and debrief frameworks.

- Week 6: Project ideation, stakeholder mapping, and scope setting.

- Week 7: Project planning—timelines, task allocation, and risk checks.

- Week 8: Project execution with midpoint instructor coaching and mentor check-ins.

- Week 9: Leadership in community—service learning kickoff and civic ethics discussion.

- Week 10: Capstone presentations, competency-rubric scoring, and celebration.

Access, Equity, Staffing & Training

We, at the Young Explorers Club, see equity and access gaps every season: lower-income and marginalized teens often miss out on leadership opportunities because of cost, transport and time. Logistical and financial barriers shrink participation and weaken program diversity. I prioritize policies that remove those obstacles and make programs feel relevant and safe for all students. Learn more about our youth leadership program as a practical model.

Best practices for inclusion

Below are high-impact practices I implement to increase outreach and retention.

- Offer sliding-scale fees and clear fee-waiver paths so cost isn’t the gatekeeper.

- Provide a transportation stipend or organized transit to ensure access across neighborhoods.

- Supply free materials, uniforms and meals to remove hidden costs.

- Use a culturally responsive curriculum and hire representative adult mentors who reflect participant backgrounds.

- Build partnerships with community groups such as Boys & Girls Club and 4-H to broaden reach and share costs.

- Center youth voice through steering committees and co-designed curricula to boost relevance and retention.

- Budget with local data. For example, if 30% of district students qualify for free or reduced-price lunch, ensure at least 70% of leadership program slots are free or subsidized for those students, and pursue nonprofit partnerships to cover gaps.

Track participation barriers regularly and adjust supports based on what the data shows.

Staffing, qualifications and ongoing training

I staff programs to match activity type and risk. Aim for an adult:youth ratio of 1:8–1:12 for discussion settings and 1:4–1:6 for hands-on or higher-risk skill coaching. Choose staff with youth development experience, SEL training, trauma-informed practice and strong facilitation skills. Require background checks and cultural competence assessments before hiring.
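Ratios are easy to misapply when a day mixes discussion and hands-on blocks, so it can help to compute staffing per activity type. A minimal sketch; the activity labels and function name are hypothetical, and counts round up to whole staff members. Staffing to the higher count is the conservative choice for higher-risk sessions.

```python
from math import ceil

# Youth per adult, from the ratios above: (tightest, loosest).
RATIOS = {
    "discussion": (8, 12),  # 1:8 to 1:12
    "hands_on": (4, 6),     # 1:4 to 1:6 for hands-on or higher-risk coaching
}


def adults_needed(youth: int, activity: str) -> tuple[int, int]:
    """Return (fewest, most) adults needed for a session at the stated ratios."""
    tightest, loosest = RATIOS[activity]
    return ceil(youth / loosest), ceil(youth / tightest)


for activity in RATIOS:
    fewest, most = adults_needed(30, activity)
    print(f"30 youth, {activity}: {fewest}-{most} adults")
```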

I set clear training standards to keep quality high and reduce turnover. Expect initial onboarding of 16–24 hours covering safety, facilitation tools and equity practices. Schedule quarterly professional development of 4–8 hours focused on SEL, trauma-informed responses and curriculum updates. Use standardized facilitator guides and cross-training so staff can fill multiple roles when needed.

Measure inclusion with concrete metrics: compare participant demographics to district demographics and aim for proportional representation. Track barriers-to-participation categories (transportation, scheduling, fees) and report reductions over time. I use these metrics to guide recruitment, budget adjustments and partnership priorities.
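The proportional-representation comparison can be expressed as each group's share of participants divided by its share of district enrollment, where 1.0 means proportional. A minimal sketch; the group labels, counts and the 0.8 flagging threshold are hypothetical choices, not a standard.

```python
def representation_ratios(participants: dict[str, int], district_share: dict[str, float],
                          flag_below: float = 0.8) -> tuple[dict[str, float], list[str]]:
    """Compare participant demographics with district demographics."""
    total = sum(participants.values())
    ratios = {}
    for group, share in district_share.items():
        observed = participants.get(group, 0) / total  # group's share of participants
        ratios[group] = round(observed / share, 2)
    under_represented = [group for group, ratio in ratios.items() if ratio < flag_below]
    return ratios, under_represented


# Hypothetical enrollment counts and district shares.
ratios, flagged = representation_ratios(
    {"group_a": 30, "group_b": 10},
    {"group_a": 0.5, "group_b": 0.5},
)
print(ratios, "under-represented:", flagged)
```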

Cost, Funding, Return on Investment & Sustaining Impact

Cost components and per-student ranges

I break down budgets into clear buckets so stakeholders see where every dollar goes. The core line items are staffing, facilities, transport, scholarships and evaluation; I also include materials and marketing. Below I list the primary cost drivers and what to expect.

- Staffing: instructors, youth workers, program coordinators, and administrative support.

- Facilities: rental, utilities, insurance, and maintenance.

- Materials: curriculum, safety gear, technology, and consumables.

- Transport: buses, vans, fuel, and driver insurance.

- Scholarships: reduced-fee slots and full bursaries to ensure access.

- Marketing: outreach to schools, families, and funders.

- Assessment/evaluation: tools, external evaluators and data management.

I use these per-student cost bands to set realistic expectations: $50–$400 for a club, $500–$3,000+ for a residential program, and $1,000–$2,500 for a well-resourced program. These figures help planners choose a model that fits mission and capacity.
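A minimal sketch of checking a draft budget against those bands; the line-item amounts and cohort size are hypothetical, and the residential band's upper bound is treated as soft because it is listed as $3,000+.

```python
# Per-student cost bands (USD): (low, high).
BANDS = {
    "club": (50, 400),
    "residential": (500, 3000),  # listed as "$3,000+", so this upper bound is soft
    "well-resourced": (1000, 2500),
}


def per_student_cost(line_items: dict[str, float], students: int) -> float:
    """Total of all budget line items divided by the number of students served."""
    if students <= 0:
        raise ValueError("students must be positive")
    return sum(line_items.values()) / students


def compare_to_band(cost: float, model: str) -> str:
    """Place a per-student cost relative to a program model's band."""
    low, high = BANDS[model]
    if cost < low:
        return "below band"
    if cost > high:
        return "above band"
    return "within band"


# Hypothetical annual budget for a 25-student cohort.
budget = {
    "staffing": 18000, "facilities": 2000, "materials": 1500, "transport": 3000,
    "scholarships": 4000, "marketing": 500, "evaluation": 1000,
}
cost = per_student_cost(budget, students=25)
for model in BANDS:
    print(f"${cost:,.0f} per student vs {model}: {compare_to_band(cost, model)}")
```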

Funding strategies, ROI framing and sustaining impact

We frame return on investment (ROI) in tangible outcomes. Translate program effects into cost-avoidance and funder value by linking reductions in disciplinary incidents, improved attendance and GPA, and higher post-secondary enrollment to saved school and community costs. Funders respond when you show how a leadership program reduces downstream expenditures and improves measurable outcomes.

We recommend tracking cost-per-outcome to prove impact. Useful measures include:

- Cost per percentage point increase in leadership score

- Cost per retained student
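Both measures are simple ratios. A minimal sketch, assuming the leadership score is reported on a 0–100 scale so gains are in percentage points; the function names and all figures are hypothetical.

```python
def cost_per_point(total_cost: float, pre_score: float, post_score: float) -> float:
    """Program cost per percentage-point gain in the mean leadership score."""
    gain = post_score - pre_score
    if gain <= 0:
        raise ValueError("no gain to attribute cost to")
    return total_cost / gain


def cost_per_retained_student(total_cost: float, retained: int) -> float:
    """Program cost divided by the number of students who completed."""
    if retained <= 0:
        raise ValueError("retained must be positive")
    return total_cost / retained


# Hypothetical: $30,000 program, mean score 55 -> 63, 20 students retained.
print(f"${cost_per_point(30000, 55, 63):,.0f} per point")
print(f"${cost_per_retained_student(30000, 20):,.0f} per retained student")
```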

Diversify funding so a single revenue shock doesn’t cripple the program. Typical portfolios include municipal and foundation grants, corporate CSR partners, sliding-scale participant fees, school district allocations, and in-kind partnerships for space or transportation. Tap corporate partners for sponsorship of named cohorts or scholarships. Blend earned income with grants to stabilize cash flow and build donor confidence.

Sustaining impact requires deliberate alumni pathways. Build alumni networks, create internship pipelines, and continue mentorship after the program ends. Offer micro-credentials and digital badges to keep young leaders engaged and to signal value to schools and employers. I encourage linking program pages like our youth leadership program to alumni resources so participants see progression routes.

For measurement, track post-secondary enrollment, employment and internship placements, and continued civic engagement. Set operational targets as well: maintain contact with 60–80% of alumni and run an annual 5–10 minute outcome survey to capture trends without burning goodwill. Short, regular surveys give you actionable data for renewals and grant reports.

Operational tips I use to cut unit costs without harming quality:

- Leverage school facilities and bus contracts to reduce facilities and transport line items.

- Recruit trained volunteers and alumni as assistant staff to lower staffing expenses while boosting engagement.

- Package evaluation into grants so baseline and follow-up data are funded externally.

Presenting a funder-ready ROI narrative closes deals. Show the math: current per-student price, expected outcome gains, and the implied public savings over 3–5 years. Donors buy scaled, measurable impact. We position our programs to meet that demand and to sustain outcomes through alumni pathways and recognizable credentials.
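The math can be as simple as comparing program cost with projected savings over the same 3–5 year horizon. A minimal sketch; the savings-per-student figure is a hypothetical input that a real narrative would need to source from local data, and the optional discount rate is an added assumption so future savings are not overstated.

```python
def projected_roi(per_student_cost: float, students: int,
                  annual_savings_per_student: float, years: int,
                  discount_rate: float = 0.0) -> float:
    """Return (savings - cost) / cost over the given horizon.

    Savings in year t are discounted by (1 + discount_rate) ** t.
    """
    cost = per_student_cost * students
    savings = sum(
        annual_savings_per_student * students / (1 + discount_rate) ** year
        for year in range(1, years + 1)
    )
    return (savings - cost) / cost


# Hypothetical: $1,200 per student, 25 students, $500 in avoided costs per student per year.
for years in (3, 5):
    roi = projected_roi(1200, 25, 500, years, discount_rate=0.03)
    print(f"{years}-year ROI: {roi:.0%}")
```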

Technology, Common Challenges & Quick Implementation Checklist

At the Young Explorers Club, we standardize a small stack of tools so staff can focus on facilitation instead of tech. For live sessions we use Zoom, Google Meet or Microsoft Teams. For collaborative work we bring in Miro, Jamboard and Padlet. We gamify short reviews with Kahoot! For project flow we use Trello or Asana, plus a classroom-appropriate Slack workspace for messaging. For evaluation we rely on Qualtrics, Google Forms or SurveyMonkey. For realistic social-emotional learning practice we use Kognito. I reference CASEL, the Search Institute's 40 Developmental Assets and the SEL measures in Durlak et al. when aligning assessments and learning objectives.

I design programs for blended delivery: asynchronous micro-learning modules followed by synchronous practice and debriefs. Use Kognito or similar for simulation-based SEL training and reserve live time for coaching and application. This mix cuts seat-time while keeping high-impact practice.

Keep data and digital readiness simple and clear. Checklist essentials include:

- Devices for participants and a device pool for equity.

- Stable connectivity and a backup low-bandwidth plan.

- Signed consent and parent permission forms.

- FERPA compliance and adherence to local data-protection rules.

- Secure survey platforms and encrypted storage.

- Clear data-retention and deletion policy.

Common challenges come up in every rollout. Here’s how we handle the usual blockers:

- Inconsistent attendance: run shorter modular sessions, offer flexible scheduling and make-up practices, and add small incentives tied to milestones.

- Lack of funding: diversify revenue with sliding-scale fees, sponsorships, and in-kind partnerships for supplies or space.

- Staff turnover: cross-train staff, keep standardized facilitator guides and offer reasonable stipends plus clear onboarding.

- Measurement difficulties: embed evaluation from day one, use validated instruments (see CASEL and Durlak et al.), and partner with local research institutions when possible.

I also build partnerships that improve access and logistics. Local schools, transport providers and community groups reduce barriers and improve retention. For program examples and recruitment framing, see our youth leadership program page.

Quick implementation checklist and timelines

- Define goals & target metrics (attendance, behavioral outcomes, SEL scores).

- Choose program model and set contact-hour target (micro-modules vs full-day intensives).

- Set budget and per-student cost estimates; plan for contingencies.

- Recruit & train staff; plan 16–24 hours onboarding and practice facilitation.

- Plan curriculum (modules & hours) and decide delivery mode (blended recommended).

- Partner for equity/access (transportation, scholarships, device loans).

- Set up evaluation instruments (pre/post using CASEL/Search Institute/Durlak et al. measures) and a data-collection plan.

- Schedule follow-up and alumni tracking procedures.

Time to launch: a simple pilot can be ready in 8–12 weeks, while a full school-year rollout generally needs 3–6 months of planning.

Sources

CASEL — Core SEL Competencies / CASEL Framework

4‑H — The 4‑H Study of Positive Youth Development

Tufts University (Lerner Lab) — The 4‑H Study of Positive Youth Development

National Institute of Mental Health (NIMH) — The Teen Brain: Still Under Construction

Search Institute — 40 Developmental Assets

Boys & Girls Clubs of America — Research & Impact

Girl Scouts Research Institute — Research & Evaluation

Kognito — Simulation-Based SEL & Behavioral Health Training (Resources)

Qualtrics — Research & Survey Tools