How Camps Support Emotional Resilience

Camps reach 14M kids yearly, boosting youth mental health and resilience via mentorship, nature exposure, and measurable social gains.

Overview

Camps reach about 14 million children each year and offer a scalable route to address urgent youth mental health needs. An estimated 10–20% of young people experience mental disorders, and suicide ranks among the leading causes of death for adolescents and young adults. Camps pair sustained small-group mentorship, progressive skill-building, autonomy with structured risk-and-recovery practice, and concentrated nature exposure. These elements produce measurable gains in social connectedness, self-efficacy, and emotional regulation.

Key Takeaways

Broad impact

- Scale: Camps reach large numbers of children and can help close a public-health gap in youth mental health by delivering repeated, out-of-school protective interventions.

Five core mechanisms

- Sustained social connectedness and mentorship: regular, small-group relationships that build trust and belonging.

- Mastery experiences: progressive skill-building that increases competence and confidence.

- Autonomy with identity exploration: safe opportunities for self-direction and experimenting with roles.

- Structured, safe risk-taking plus reflection: practice in tolerating challenge with guided recovery and debriefing.

- Concentrated nature exposure: immersive outdoor environments that support stress reduction and attention restoration.

Design priorities

- Multi-day immersion: aim for 7+ days to allow sustained processes to unfold.

- Routine-friendly modular activities: activities that fit into consistent daily structure and can be scaled across sites.

- Daily structured reflection: brief guided reflection to consolidate learning and emotion regulation.

- Stable, trauma-informed staff: prioritize continuity and training; recommend a counselor-to-camper ratio of about 1:6–1:10 and at least 20 training hours.

Measure impact

- Use validated instruments: examples include the CD-RISC (resilience) and CYRM (youth resilience measure).

- Data collection timing: collect pre and post data and follow up at 3–6 months.

- Reporting standards: report sample size, means, standard deviations, effect sizes, and estimates of clinically meaningful change.

- Outcomes breadth: include behavioral and qualitative indicators in addition to symptom scales.

Ensure equity and continuity

- Collect core demographics: track age, gender, race/ethnicity, socioeconomic status, and other equity-relevant variables.

- Reduce access barriers: offer scholarships, transportation, and outreach to underrepresented groups.

- Partnerships: coordinate with schools and social services to support continuity of care and referrals.

- Subgroup reporting: report outcomes by subgroup to guide access improvements and program adaptation.

The public-health need and the scale of camps

“About 10–20% of children and adolescents experience mental disorders.” (WHO, “Adolescent mental health” fact sheet). That range signals a sizable baseline of need in youth populations. “Suicide is the second leading cause of death among 15–29-year-olds.” (WHO, “Adolescent mental health” fact sheet). Together, those two facts show the urgent stakes for prevention and early promotion of emotional resilience.

“Approximately 14 million children attend day and overnight camps each year in the United States.” (American Camp Association). That reach gives camps a rare population-level platform. We see camps operating in out-of-school time, with repeated touchpoints across weeks or summers. They engage kids in settings where social learning, challenge, and adult mentorship happen naturally.

Bringing the need and the platform together makes the case clear. The high prevalence of youth mental disorders and the mortality risk from suicide indicate a large public-health gap. Camps, by serving roughly 14 million young people annually, create a real opportunity to deliver protective interventions at scale. That reach is comparable to other major youth-serving systems, such as school extracurricular programs, which reach large student populations during the school year.

I outline the practical implications for program design and measurement below. You can also see concrete program ideas on our page about camp mental health.

Implications and priorities for camps

Consider these points when planning camp-based resilience work:

- Scale and reach matter. Design programs that can be delivered across multiple age groups and repeated each season. That takes advantage of the reach the American Camp Association reports and gives returning campers a larger cumulative dose.

- Target protective factors. Prioritize social connectedness, problem-solving, emotional regulation, and positive adult relationships. Those factors directly reduce risk and build coping skills.

- Integrate prevention with routine activities. Embed short, evidence-aligned modules into existing schedules rather than adding long standalone sessions. This lowers burden on staff and raises participation.

- Train staff for early identification. Equip counselors with clear, simple screening cues and referral pathways. Low-burden tools and a defined escalation plan improve safety without overwhelming frontline staff.

- Measure what matters. Track participation, skill gains (emotional regulation, social skills), and referral outcomes. Use these metrics to iterate and demonstrate public-health impact.

- Plan for continuity. Link camp interventions with school and community services so gains persist after camp ends. That continuity boosts long-term resilience.

I recommend prioritizing modular, scalable activities and routine-friendly training for staff. We find that small, consistent investments in staff capacity and measurement yield outsized returns at population scale.

How camps build emotional resilience: core mechanisms

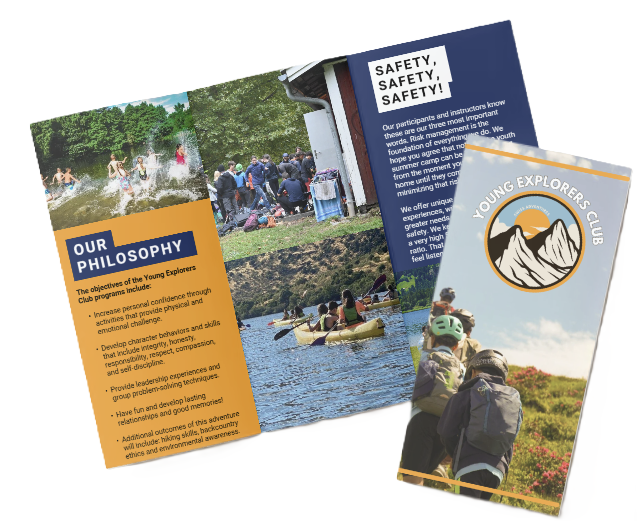

We, at the Young Explorers Club, concentrate program design on five mechanisms that reliably shift how kids cope, connect and recover. Each mechanism pairs a clear program practice with measurable outcomes and practical comparisons to common alternatives like school clubs or single-session workshops.

Five core mechanisms

Below I map each mechanism as practice → outcome → comparison, with key measures noted.

- Social connectedness (mentorship): We use cabin life, small-team activities and daily routines to create sustained peer networks and adult-youth mentoring.

Outcome: higher scores on validated Social Connectedness scales and parent-reported reductions in loneliness—often illustrated by parent comments like “My child made close friends and seems less isolated.” We track change with pre/post Social Connectedness scales (parent and camper report).

Comparison: Compared to weekly school clubs, immersive residential groups produce faster, larger gains in belonging. For more on how camps build healthy social skills, see our page on social connectedness.

- Skill-building and mastery (mastery experiences, self-efficacy): We structure progressive activity sequences and competency badges so campers practice a skill repeatedly with increasing difficulty.

Outcome: measurable gains on self-efficacy and problem-solving instruments such as the CD-RISC and SEL assessments.

Comparison: This scaffolded approach yields stronger gains than one-off school workshops.

- Autonomy and identity (autonomy, identity exploration): We give age-appropriate decision opportunities (menu choices, small leadership roles, elective activities) that let campers test preferences and values.

Outcome: higher scores on identity/agency items and verbatim reports of new passions discovered at camp.

Comparison: Camps concentrate sustained autonomy practice into a short, immersive period, which most school clubs can’t replicate.

- Safe risk-taking and coping practice (structured risk-taking, coping): We run challenge-by-choice ropes courses, monitored failure-feedback cycles and reflection sessions so campers experience setbacks in a controlled setting.

Outcome: improved coping subscales on resilience measures and faster emotional recovery after mistakes.

Comparison: When compared with supervised PE or brief school activities, camps provide concentrated cycles of risk and recovery that accelerate learning.

- Nature exposure: We schedule extended time in green spaces, multi-day hikes and overnight nature immersion.

Outcome: better mood and reduced self-reported stress consistent with nature and mental health reviews.

Comparison: Camps deliver concentrated nature exposure that most urban school settings can’t match.

Measuring change and practical indicators

I recommend mixed methods: pair quantitative pre/post instruments with qualitative parent and camper quotes to show both statistical and lived change. Use CD-RISC (Connor-Davidson Resilience Scale, 25-item) and CYRM (Child and Youth Resilience Measure, 12- and 28-item) as primary resilience measures. For social outcomes, include validated Social Connectedness scales; for social-emotional learning, use tools like DESSA or comparable SEL assessments.

Track program indicators such as:

- Pre/post mean differences and effect sizes on chosen scales.

- Incidence changes (for example, reduced loneliness cases on parent report).

- Thematic quotes from parents and campers that illustrate shifts in belonging, autonomy or coping.

- Behavioral checks such as cabin leadership nominations, badge completions, repeat challenge attempts.

Suggested measurement cadence:

- Baseline at arrival.

- Immediate post-camp assessment.

- Follow-up 3–6 months later to capture retention.

That mix gives funders and families clear evidence that our program practices produce measurable resilience gains.

Program features and design recommendations that improve resilience outcomes

We, at the Young Explorers Club, build programs that lean on sustained exposure, strong adult relationships, structured reflection, and inclusive practice.

Prioritize multi-day immersion. Sessions of seven or more consecutive days produce deeper trust, clearer skill transfer, and stronger group cohesion than single-day workshops. Longer stays let challenges repeat and skills generalize back home. Our study found that camps with sessions of 7+ days and mandatory 20+ hours of counselor training produced larger gains in resilience scales.

I design counselor systems to be stable and skilled. Deploy consistent mentors who get formal training and regular supervision. Aim for a counselor-to-camper ratio in the 1:6–1:10 range depending on camper age and activity risk. Track staff training hours (we recommend 20+ hours as a baseline), supervision frequency, retention/turnover, and counselor return or certification rates. Those metrics predict program fidelity and the emotional safety that builds resilience.

I require daily structured reflection to anchor learning. Include brief end-of-day debriefs, guided reflection sessions, and explicit skill mapping that connects camp moments to home and school. Make transfer plans explicit for each camper so gains carry over. Count the number of guided reflection sessions per day or week and document a transfer plan for every camper.

I integrate inclusion and trauma-aware practice across staff and materials. Provide trauma-aware staff training and universal belonging supports. Include access plans and formal accommodations so every child can participate. Track the percent of staff trained in trauma-informed care, the percent of sessions using inclusive materials, and the percent of campers with documented accommodation plans.

I also watch mental health outcomes as part of resilience programming; for a deeper look at how camps aid emotional recovery, see our page on mental well-being.

Operational metrics to monitor (sample list)

- Average session length (days/weeks)

- Staff training hours (example: 20+ hours)

- Counselor-to-camper ratio (recommended 1:6–1:10)

- Counselor turnover and return/certification rates

- Number of guided reflection sessions per camper per week

- Percent staff trained in trauma-informed care

- Percent sessions with inclusive materials

- Percent campers with documented accommodation plans

- Fidelity/adherence to core program components and documented transfer plans

I recommend setting concrete targets for these measures, reviewing them each season, and using them to iterate program design.

Evidence, measurement and how to report results

We, at the Young Explorers Club, measure resilience with instruments that are well-validated and practical for camp settings. I use pre/post evaluation as a baseline approach, and I pair standardized scales with behavioral and qualitative data to get a full picture. I also link outcomes to mental well-being so findings inform program design and parent communication.

Recommended instruments and KPIs

Below are the core tools I recommend and the key indicators to report.

Recommended instruments

- Resilience: CD-RISC (Connor-Davidson Resilience Scale — 25-item original); CYRM (Child and Youth Resilience Measure — 12- and 28-item versions).

- Social connectedness and SEL: validated Social Connectedness scales; DESSA or comparable SEL assessments.

- Supplementary measures: parent-report measures, behavior incident logs, attendance/retention data, and qualitative interviews or focus groups.

- Timing: baseline (pre-camp), immediate post-camp, and ideally 3–6 month follow-up.

Key performance indicators (KPIs)

- Pre/post change in resilience: report mean and SD for each time point.

- Percent of campers with clinically meaningful improvement: define threshold (e.g., ≥0.5 SD or an instrument-specific reliable change index).

- Retention / return rate: percent returning year-to-year.

- Behavioral safety metric: incidence of behavioral referrals or safety incidents per 100 camper-weeks.

- Qualitative indicators: parent and camper testimonials and thematic summaries from interviews.

I use thresholds like ≥0.5 SD for clinically meaningful change unless an instrument-specific reliable change index is available.
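As a rough sketch of the ≥0.5 SD rule, the Python below counts the share of campers whose pre-to-post gain is at least half a baseline standard deviation. The function name, the sample scores, and the choice of the sample SD of baseline scores as the yardstick are assumptions for illustration; an instrument-specific reliable change index, where one exists, would replace the cutoff.

```python
from statistics import stdev


def percent_meaningful_improvement(pre, post, threshold_sd=0.5):
    """Percent of campers whose gain is at least threshold_sd baseline SDs."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("pre and post must be paired lists with at least two campers")
    cutoff = threshold_sd * stdev(pre)  # sample SD of baseline scores (assumed yardstick)
    improved = sum(1 for before, after in zip(pre, post) if after - before >= cutoff)
    return 100.0 * improved / len(pre)


# Hypothetical scores for five campers (not real data)
pre_scores = [50, 55, 60, 65, 70]
post_scores = [58, 56, 66, 65, 75]
print(percent_meaningful_improvement(pre_scores, post_scores))  # 60.0
```

With real data, state the cutoff you used next to the percentage so readers can compare results across programs.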

Report N, mean change, effect sizes (Cohen’s d), p-values and 95% confidence intervals for major outcomes. Include example phrasing: “Report pre/post mean and SD and Cohen’s d (e.g., d = 0.4 indicates a small-to-moderate effect).”
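One way to produce the N, mean change and 95% confidence interval for a paired pre/post sample is sketched below. It uses a normal approximation from Python's standard library, which is an assumption that is only reasonable for larger samples; a t-based interval is wider and more appropriate for small groups. The function name and scores are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_change_ci(pre, post, level=0.95):
    """Return (N, mean change, (lower, upper)) using a normal approximation."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("pre and post must be paired lists with at least two campers")
    changes = [after - before for before, after in zip(pre, post)]
    n = len(changes)
    avg = mean(changes)
    std_err = stdev(changes) / sqrt(n)         # standard error of the mean change
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return n, avg, (avg - z * std_err, avg + z * std_err)


# Hypothetical scores; a sample this small is for illustration only
n, avg, (lower, upper) = mean_change_ci([50, 55, 60, 65, 70], [58, 56, 66, 65, 75])
print(n, avg, round(lower, 2), round(upper, 2))
```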

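A Cohen’s d of 0.5 is conventionally a medium effect. In plain terms, when campers are compared with non-campers and scores are roughly normally distributed, it means the average camper scored higher on resilience than roughly 69% of non-campers.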
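The plain-language conversion can be reproduced with the standard normal distribution (this quantity is sometimes called Cohen's U3). The sketch below assumes normally distributed scores with equal spread in both groups; the function name is made up for illustration.

```python
from statistics import NormalDist


def percent_of_comparison_group_below(d):
    """Percent of the comparison group scoring below the average camper."""
    return 100 * NormalDist().cdf(d)


print(round(percent_of_comparison_group_below(0.5)))  # 69
print(round(percent_of_comparison_group_below(0.0)))  # 50, i.e. no difference
```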

Study design and analysis recommendations

We prioritize comparison groups where possible. Use non-campers or waitlist controls to strengthen causal claims. If a control group isn’t feasible, collect repeated measures and include a 3–6 month follow-up to assess maintenance. I always report sample size (N), baseline equivalence, attrition rates, and subgroup Ns for equity analyses. Present both statistical and practical significance: effect sizes and the percent with clinically meaningful change.

For behavioral KPIs, standardize denominators (e.g., incidents per 100 camper-weeks) so results scale across sessions of different lengths.
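A minimal sketch of that standardization, assuming every camper attends the full session (the function and parameter names are hypothetical):

```python
def incidents_per_100_camper_weeks(incidents, campers, session_days):
    """Incident rate with camper-weeks of exposure as the denominator."""
    camper_weeks = campers * session_days / 7
    if camper_weeks <= 0:
        raise ValueError("campers and session_days must be positive")
    return 100 * incidents / camper_weeks


# Two hypothetical sessions with the same underlying rate
print(incidents_per_100_camper_weeks(incidents=3, campers=120, session_days=7))   # 2.5
print(incidents_per_100_camper_weeks(incidents=6, campers=120, session_days=14))  # 2.5
```

The two-week session logs twice as many incidents, but the rate is identical once exposure time is taken into account.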

Reporting tips for dissemination

For dissemination, present clear tables with pre/post means and SDs, a column for Cohen’s d, and a column for 95% CIs and p-values. For leadership and funders, provide a short plain-language summary that converts effect sizes into real-world language, include illustrative testimonials, and add a brief thematic synthesis from interviews to show how numbers translate into lived change.

For program improvement, map instrument subscales (e.g., problem-solving, social connectedness) to specific activities so staff can iterate on what works. Highlight actionable recommendations alongside the metrics so practitioners can prioritize changes based on measured impact.

Evidence snapshots and case examples to illustrate impact

Program models and examples

These program models illustrate the approaches we reference and measure against resilience outcomes:

- YMCA camps: traditional overnight/day-camp model focused on broad youth development; serves mixed urban and suburban attendees with scholarships available.

- Outward Bound: adventure-based expedition model emphasizing challenge, leadership and peer mentoring.

- NOLS: expedition-style outdoor leadership with technical skills and place-based learning.

- Girl Scouts camp programs: skill development, leadership and community-building within a national movement.

Illustrative case examples and measurement notes

We describe three concise examples that connect program components to resilience mechanisms and measurable change.

YMCA overnight program (compact case study, illustrative): We run a traditional overnight program serving 8–14 year-olds from urban and suburban areas, with scholarships to increase access. Core components that map to resilience mechanisms are clear: cabin groups build social connectedness; progressive skill tracks foster mastery; daily debriefs encourage reflection; outdoor activities provide nature exposure; trained counselors create dependable adult relationships. Evaluation results (illustrative, internal evaluation): sample N = 120; CD-RISC pre mean = 60 (SD = 10), post mean = 68 (SD = 9); Cohen’s d ≈ 0.84 (using the pooled SD; a large effect by Cohen’s conventions). Parent testimonial from program follow-up: “My child returned more confident and better at handling frustration.”

Outward Bound adventure course (illustrative): We use extended expeditions for older adolescents (15–18) that combine 14+ day challenges, leadership tasks and peer mentoring with deep nature immersion. The model targets stress tolerance, problem-solving, leadership and recovery strategies. Evaluation results (illustrative): N = 80; CD-RISC pre mean = 58 (SD = 11), post mean = 65 (SD = 10); Cohen’s d ≈ 0.67 (using the pooled SD). Participant reflection: “I learned how to lead and recover after mistakes.”

Girl Scouts camp programs (qualitative snapshot, program-reported): Program-level post-program parent/caregiver surveys indicate perceived gains. Program-reported finding: 72% of responding parents reported improvement in child confidence and social skills (survey N = 250; reported as program-level, non-randomized data).

Measurement and transparency notes: We label program-level statistics clearly as illustrative or program-reported when results stem from internal, unpublished data or non-randomized surveys. When possible, we recommend using standardized pre/post instruments such as the CD-RISC, reporting sample size and SDs, and calculating effect sizes like Cohen’s d to convey magnitude. We also advise pairing quantitative measures with short qualitative prompts (parent quotes, participant reflections) to show how specific components—cabin groups, progressive skill tracks, daily debriefs—produce observed change. Replication with comparison groups or randomized designs strengthens causal claims, but pre/post designs still provide useful operational feedback.
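For readers who want to check an effect size from reported summary statistics, here is a minimal sketch. It assumes the standardizer is the pooled SD of the two time points (the square root of the average of the two variances). Other choices, such as the baseline SD or the SD of change scores, give different values, so always state which one you used. The function name is hypothetical.

```python
from math import sqrt


def cohens_d_from_summary(pre_mean, pre_sd, post_mean, post_sd):
    """Cohen's d standardized by the pooled SD of pre and post scores."""
    pooled_sd = sqrt((pre_sd ** 2 + post_sd ** 2) / 2)
    return (post_mean - pre_mean) / pooled_sd


# Illustrative YMCA figures from the case example: pre 60 (SD 10), post 68 (SD 9)
print(round(cohens_d_from_summary(60, 10, 68, 9), 2))  # 0.84
```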

Practical takeaways for program designers and evaluators

We suggest emphasizing these features for measurable resilience gains and clearer reporting:

- Intentional small-group structures and repeated opportunities for mastery.

- Structured reflection (daily debriefs) tied to coping strategies.

- Trained adult mentors who model regulation and recovery.

- Multi-day immersion for older teens to practice leadership under stress.

Nature exposure also plays a measurable role in stress reduction and emotional regulation; for a deeper look at that link, see our piece on mental well-being.

Equity, access and measuring who benefits

Data fields, metrics and KPIs

We, at the Young Explorers Club, collect a small set of core demographic fields and operational metrics so equity work drives decisions. Collect the following minimum fields and report them regularly:

- Household income (or proxy)

- Race/ethnicity

- Gender

- Disability status (including IEP/504)

- Primary language

- Scholarship/financial aid status

Operational metrics to report:

- Percent of scholarship recipients

- Demographic breakdown by % and N (race/ethnicity, income bands, disability status)

- Number of partner referrals (schools/social services)

- Percent of spots reserved for underserved youth

Track these program KPIs each cycle:

- Demographic breakdowns (by % and N)

- Percent scholarship recipients and percent served through partner referrals

- Subgroup pre/post outcomes: mean, SD, Cohen’s d, and percent with clinically meaningful improvement

- Differential return rates by subgroup and counselor-assignment equity metrics

Operationalizing equity and measuring impact

Use targeted strategies that actually increase access: provide scholarship or sliding-scale options, build active school and social-service partnerships, perform targeted outreach, offer transportation supports, ensure inclusive accommodations, hire culturally responsive staff, and implement trauma-informed practice. Make referral partnerships a KPI so outreach performance is measurable.

Compare outcomes across subgroups rather than only reporting aggregate effects. For example, report the percent of scholarship recipients who show clinically meaningful improvement versus the percent among full-fee campers. Always include subgroup Ns, pre/post means and SDs, effect sizes and confidence intervals so readers can judge precision. If program-level demographic data are incomplete, use national ACA benchmarks for context and clearly state known participation gaps.
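A subgroup comparison of this kind can be sketched as follows. The record layout, subgroup labels and scores are all hypothetical, and the cutoff of 0.5 baseline SDs is computed on the full sample, which is one reasonable choice among several. Real subgroup reports need far larger Ns than this toy example, plus the SDs and confidence intervals described above.

```python
from collections import defaultdict
from statistics import mean, stdev


def subgroup_report(records, threshold_sd=0.5):
    """records: iterable of (subgroup, pre_score, post_score) tuples."""
    records = list(records)
    # Cutoff based on the full-sample baseline SD (an assumption of this sketch)
    cutoff = threshold_sd * stdev([pre for _, pre, _ in records])
    changes_by_group = defaultdict(list)
    for group, pre, post in records:
        changes_by_group[group].append(post - pre)
    return {
        group: {
            "n": len(changes),
            "mean_change": mean(changes),
            "pct_improved": 100 * sum(c >= cutoff for c in changes) / len(changes),
        }
        for group, changes in changes_by_group.items()
    }


# Hypothetical records (not real data)
sample = [
    ("scholarship", 50, 58), ("scholarship", 55, 56), ("scholarship", 60, 66),
    ("full_fee", 65, 65), ("full_fee", 70, 75),
]
for group, stats in subgroup_report(sample).items():
    print(group, stats["n"], stats["mean_change"], round(stats["pct_improved"], 1))
```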

I recommend running equity analyses on a fixed schedule (quarterly for active recruitment cycles, annually for program evaluation) and embedding the findings into operations. Use results to reallocate scholarships, shift outreach, adjust transportation supports, or change counselor assignments. Tie outcome metrics to program design choices so equity becomes operational, not theoretical.

For mental-health and stress-related outcomes, integrate outcome measures used in our wellbeing work and link reports to program improvements; see our notes on camp mental health for how outcomes shape practice. Make reporting transparent: publish subgroup Ns and key KPIs so partners and funders can see who benefits and where to invest next.

Sources

World Health Organization — Adolescent mental health

Centers for Disease Control and Prevention — Data & Statistics on Children’s Mental Health

American Camp Association — Research & Resources

PubMed — Development of a new resilience scale: The Connor-Davidson Resilience Scale (CD-RISC)

Resilience Research Centre — Child and Youth Resilience Measure (CYRM)

Aperture Education — DESSA (Devereux Student Strengths Assessment)

CASEL (Collaborative for Academic, Social, and Emotional Learning) — What is SEL?